MDR, IVDR, and the AI Act: What MDCG 2025-6 Means for Medical Device Software

Introduction

The European Union’s release of MDCG 2025-6 has reshaped the regulatory landscape for medical device software—and if your product includes any artificial intelligence, this isn’t just an update. It’s a survival checklist.

Gone are the days when medical device software (MDSW) only had to navigate MDR or IVDR. Enter MDAI: Medical Device Artificial Intelligence—a new label for machine-learning-enabled devices now potentially subject to the high-risk AI category under the AIA (Artificial Intelligence Act).

Whether you manufacture an AI-powered wearable, health-tracking mobile app, or diagnostic algorithm embedded in imaging software, this framework impacts your clinical evaluation strategy, technical documentation, risk management, and post-market responsibilities.

The transition is not optional. The AIA's high-risk obligations for these devices apply from 2 August 2027.

What is MDCG 2025-6?

MDCG 2025-6 is a 27-page FAQ, not a conventional guidance document, that clarifies how MDR/IVDR compliance and the new AI Act fit together. The format is FAQ, but the content reads more like an implementation checklist.

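And rightly so. It is not legally binding, but it was endorsed jointly by the MDCG and the AI Board (it also carries the number AIB 2025-1), so expect notified bodies to read it closely.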

Think of it as a Rosetta Stone for navigating AI's role in regulated medical devices in Europe. But unlike most guidance, it is tied to the AIA's hard timelines, practical implementation expectations, and layers of accountability that go beyond CE marking.

MDAI: A New Class Emerges

If your software is regulated under MDR or IVDR and qualifies as an AI system under the AIA (machine learning is the typical case), it is Medical Device Artificial Intelligence (MDAI).

The label does more than change the name. If your device also requires a notified body conformity assessment under MDR or IVDR, it falls into the AIA's “high-risk” category, and the regulatory burden escalates. With that come requirements around:

Ethical risk

Fundamental rights

Bias monitoring

Algorithmic transparency

Even if you’ve already cleared MDR/IVDR hurdles, you’re not done yet. The AIA adds a second compliance layer that dives deep into how your AI learns, evolves, and performs over time.

Who is Affected by MDCG 2025-6?

Short answer? Almost everyone working in digital health:

Manufacturers of AI-based medical devices

Software developers creating modules or apps for diagnosis, monitoring, or therapy

Companies producing accessories for MDAI or Annex XVI devices

Deployment companies running these devices in clinical or real-world settings

High-Risk Classification under AIA

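Under the AIA (Article 6(1)), an AI system that is a medical device, or a safety component of one, is high-risk when the device requires a notified body conformity assessment under MDR or IVDR. Algorithms that influence health decisions usually meet this test, including: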

ML-powered diagnostics

Triage algorithms

Treatment decision support tools

Real-time monitoring platforms

If that’s you, prepare for profound changes in compliance routines.

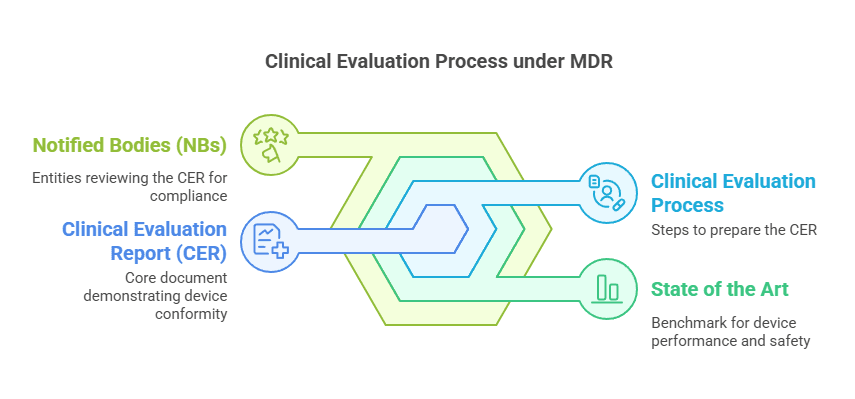

Clinical Evaluation Under Pressure

The MDR requires clinical evaluation. But with the AIA added, that evaluation must now also show:

Explainability: What the algorithm does and how it does it

Dataset Fairness: Biases examined, mitigated, and documented; no blind spots

Human Oversight: Yes, even a “stop” button counts

Ongoing Data Governance: Document, validate, test, and monitor
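
To make the dataset-fairness point concrete, here is a minimal sketch of one common bias check: comparing sensitivity (true positive rate) across patient subgroups and flagging any subgroup that trails the best one by more than a tolerance. It assumes you already have per-case ground truth and predictions tagged with a subgroup such as sex or age band. The function names, the tuple layout, and the 0.05 tolerance are hypothetical choices for illustration, not values taken from MDCG 2025-6 or the AIA.

```python
from collections import defaultdict


def sensitivity_by_subgroup(records):
    """Sensitivity (true positive rate) per subgroup.

    records: iterable of (subgroup, y_true, y_pred) tuples with binary 0/1 labels.
    Subgroups without positive cases are skipped, since sensitivity is undefined there.
    """
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_pos[group] += 1
    return {g: true_pos[g] / positives[g] for g in positives}


def flag_sensitivity_gaps(records, tolerance=0.05):
    """Return {subgroup: gap} for subgroups trailing the best subgroup by more than tolerance.

    The 0.05 default is a hypothetical placeholder; set it from your own risk analysis.
    """
    sens = sensitivity_by_subgroup(records)
    if not sens:
        return {}
    best = max(sens.values())
    return {g: round(best - s, 3) for g, s in sens.items() if best - s > tolerance}


if __name__ == "__main__":
    # Toy data: (subgroup, ground truth, model prediction)
    records = [
        ("female", 1, 1), ("female", 1, 1), ("female", 1, 0), ("female", 0, 0),
        ("male", 1, 1), ("male", 1, 1), ("male", 1, 1), ("male", 0, 1),
    ]
    print(flag_sensitivity_gaps(records))  # {'female': 0.333}
```

Sensitivity is only one metric; the same per-subgroup pattern applies to specificity or calibration, and which metrics matter should follow from the intended purpose and the risk file.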

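And don’t forget: if your device continues learning post-market, define those changes up front in a Predetermined Change Control Plan (PCCP) that is part of the technical documentation at the initial conformity assessment. Otherwise, an update may count as a substantial modification that requires a new conformity assessment.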

Technical Documentation Now Has an AI Flavor

Gone are the days of static PDFs. Your technical file must now evolve alongside your AI. That includes:

AI-specific risk assessments

Fundamental-rights risk analysis

Performance metrics aligned with intended purpose

Lifecycle control documents

Logging and behavioral tracking
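
For the logging item, the AIA expects high-risk AI systems to allow automatic recording of events over their lifetime. A minimal sketch of what one per-inference event record could look like follows, assuming an append-only JSON Lines file. The field names, the choice to store a SHA-256 digest of the input instead of the raw input, and the `overridden_by_user` flag are all hypothetical design choices, not a format prescribed by the AIA or MDCG 2025-6.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_log_record(model_version, input_bytes, output, overridden_by_user=False):
    """Build one event record for a single inference (hypothetical schema).

    Stores a SHA-256 digest of the input rather than the input itself,
    so a log entry can be matched to a stored case without copying patient data.
    """
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "input_sha256": hashlib.sha256(input_bytes).hexdigest(),
        "output": output,
        "overridden_by_user": overridden_by_user,
    }


def append_record(path, record):
    """Append the record as a single JSON line; earlier lines are never rewritten."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")


if __name__ == "__main__":
    rec = make_log_record("1.4.2", b"<image bytes>", {"finding": "suspicious", "score": 0.91})
    print(json.dumps(rec, sort_keys=True))
```

Recording the model version and any human override alongside each output is what lets post-market monitoring tie performance drift back to a specific release; whether hashing inputs meets your traceability and data-protection needs is a decision for your own risk analysis.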

Even better? MDCG 2025-6 allows you to embed these into your MDR/IVDR files—but only if structured correctly.

Timeline for Compliance

✅ 2025

Start aligning your QMS with AIA

Audit your clinical data for AI relevance

✅ 2026

Build PCCPs and QMS integrations

Update labeling and user roles

✅ 2027 (August)

AIA requirements for high-risk MDAI apply from 2 August 2027

AIA post-market monitoring obligations join your existing MDR/IVDR post-market surveillance

Key Terms: ‘User’ vs. ‘Deployer’

MDR: Defines a user as any healthcare professional or lay person who uses a device.

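AIA: Defines a deployer as any natural or legal person, public authority, agency, or other body using an AI system under its authority (except for personal, non-professional use).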

If your documentation treats them interchangeably, auditors will notice. Define both clearly in your QMS and conformity declarations.

FAQs

What does MDCG 2025-6 require me to do?

It does not create new obligations of its own; it explains how manufacturers can embed the AIA's requirements into their MDR/IVDR documentation and processes.

Does the AIA apply to all medical devices?

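It applies to medical devices that are or contain AI systems (MDAI), typically machine-learning-based. Its high-risk requirements apply to MDAI that need a notified body conformity assessment under MDR or IVDR.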

Is clinical evaluation still mandatory?

Yes, and it now requires explainability, bias control, and oversight documentation.

When is the compliance deadline?

2 August 2027, with preparation starting immediately.

Can I reuse my MDR files for AIA compliance?

Yes, if you integrate AIA layers completely and traceably.

What happens post-2027?

Substantial modifications trigger a new conformity assessment, even if CE marking was previously granted.

Final Thoughts

MDCG 2025-6 changes the game. This isn’t about adding a page or two to your technical documentation. It’s about reinventing your compliance architecture to reflect a new reality: AI is here, and it’s regulated.

Start today. Build smart. And if your device learns, you’d better make sure your team does too.

PS: For more information, subscribe to my newsletter and get access to exclusive content, private insights, and expert guidance on MDR compliance and CE marking.

✌️ Peace,

Hatem Rabeh, MD, MSc Ing

Your Clinical Evaluation Expert & Partner