Clinical Evaluation vs. Clinical Investigation for AI-Embedded Medical Devices under EU MDR

Introduction and Regulatory Context

The EU Medical Device Regulation (MDR, Regulation (EU) 2017/745) establishes rigorous requirements for demonstrating the safety and performance of medical devices, including those incorporating artificial intelligence (AI).

Two key concepts in this regulatory framework are clinical evaluation and clinical investigation. While closely related, they serve different purposes. A clinical evaluation is a continuous, lifecycle-long process of assessing clinical data to verify a device’s safety and performance, whereas a clinical investigation is a specific method of generating new clinical data (often a formal clinical study with human subjects) to support the clinical evaluation (health.ec.europa.eu).

This blog post provides a detailed overview of the differences between clinical evaluation and clinical investigation for AI-embedded medical devices, in line with MDR requirements. It references relevant MDR Articles (notably Article 61 for clinical evaluation and Article 62 for clinical investigations), key guidance documents (MDCG 2020-1, MDCG 2020-6, MEDDEV 2.7/1 Rev.4), and standards (ISO 14155 for GCP in device trials). Special attention is given to how these concepts apply to AI-based software devices, considering their adaptive and data-driven nature.

Definitions under MDR

Clinical Evaluation (MDR): Article 2(44) of the MDR defines clinical evaluation as “a systematic and planned process to continuously generate, collect, analyse and assess the clinical data pertaining to a device in order to verify the safety and performance, including clinical benefits, of the device when used as intended by the manufacturer.”

In simpler terms, it is an ongoing assessment of all relevant clinical evidence for a device throughout its lifecycle. Importantly, MDR emphasizes that clinical evaluation is not a one-time event but a continuous process that must be maintained and updated as new information becomes available (health.ec.europa.eu).

This process is part of the manufacturer’s quality management system and is required for all medical devices, from low-risk to high-risk, including AI-driven software.

Clinical Investigation (MDR): By contrast, a clinical investigation (sometimes called a clinical trial or clinical study for devices) is defined in MDR Article 2(45) as “any systematic investigation involving one or more human subjects, undertaken to assess the safety or performance of a device” (health.ec.europa.eu).

In practice, a clinical investigation is one method of generating clinical data – typically a prospective study conducted in a clinical setting with human participants. Clinical investigations are governed by strict protocols (often called Clinical Investigation Plans, CIPs) and must be conducted in accordance with Good Clinical Practice (GCP). Under MDR Article 62, clinical investigations carried out to demonstrate conformity must be designed to show that the device is suitable for its intended purpose and that any risks or side-effects are acceptable in light of the benefits. These studies are expected to follow ethical and scientific quality standards such as ISO 14155:2020, which is the international GCP standard for medical device trials (bsigroup.com).

In essence, a clinical investigation is one source of clinical data feeding into the broader clinical evaluation process.

Relationship: In the MDR framework, every medical device requires a clinical evaluation, but not every device will require a new clinical investigation. Clinical evaluation is the overarching process – a mandatory, continual activity – whereas a clinical investigation is a potential component of that process, undertaken if needed to gather additional clinical evidence. A useful way to differentiate them is: clinical evaluation is always required, and it may include or lead to a clinical investigation if the existing clinical evidence is insufficient.

For example, MDR Article 61(1) explicitly requires manufacturers to plan and conduct a clinical evaluation for each device as part of the conformity assessment (health.ec.europa.eu).

On the other hand, MDR Article 61(4) requires clinical investigations for implantable and Class III devices, subject to the exemptions set out in Article 61(4) to (6), for example where equivalence to an already marketed device can be demonstrated and reliance on its clinical data justified (health.ec.europa.eu).

In summary, clinical evaluation is an all-encompassing evidence assessment process, whereas a clinical investigation is a discrete study to collect clinical data, usually invoked when the clinical evaluation identifies a need for more evidence.

Clinical Evaluation: A Continuous Lifecycle Process

Continuous Process and Lifecycle Management: Under EU MDR, clinical evaluation is a proactive and continuous process that spans the entire product lifecycle. It begins in the development phase, is crucial to obtaining CE marking (pre-market), and continues post-market through periodic updates and post-market clinical follow-up (PMCF). The MDR (Annex XIV, Part A) and guidance documents make clear that clinical evaluation is not a “one-and-done” report, but an ongoing cycle. As noted in MDCG 2020-6, “clinical evaluation is a process where [the] qualified assessment has to be done on a continuous basis” (health.ec.europa.eu).

Similarly, industry guidance highlights that under the MDR (unlike under the old MDD), manufacturers must update the clinical evaluation with post-market data – “it is now necessary to test a product not only before its release but also after it is on the market. Clinical evaluations are an ongoing and continuous process, and the follow-up process is known as Post-Market Clinical Follow-up (PMCF).”

In fact, MDR Annex XIV Part B explicitly states: “PMCF shall be understood to be a continuous process that updates the clinical evaluation referred to in Article 61…”.

This means manufacturers of AI-based devices must have procedures to continually gather real-world clinical data and feed it back into the evaluation to ensure the device remains safe and effective in light of any new information.

Purpose of Clinical Evaluation: The fundamental goal of a clinical evaluation is to demonstrate that, for its intended purpose and target population, the device achieves its intended clinical benefits and performance without posing unacceptable risks. In regulatory terms, the clinical evaluation supports confirmation of conformity with the relevant General Safety and Performance Requirements (GSPRs) set out in Annex I of the MDR.

This includes showing that any known side-effects or risks are acceptable when weighed against the benefits. The clinical evaluation also helps define the device’s benefit-risk profile and ensures that any claims about clinical performance or outcomes are substantiated by evidence.

For AI-driven devices, this includes claims about diagnostic accuracy, prognostic capabilities, or therapeutic recommendations provided by the algorithm. Manufacturers must also use the clinical evaluation to identify residual risks, uncertainties, and areas needing improvement. For example, it can reveal that certain patient subgroups were under-represented in the data or that the device’s performance drifts over time, prompting risk mitigation or further study.

Sources of Clinical Data: A hallmark of clinical evaluation is that it draws on all available clinical data relevant to the device. MDR Article 2(48) defines clinical data as information concerning safety or performance that is generated from the use of a device and sourced from: (a) clinical investigation(s) of the device in question, (b) clinical investigations or other studies reported in the scientific literature on a device for which equivalence to the device in question can be demonstrated, (c) peer-reviewed literature on other clinical experience with the device or an equivalent device, and (d) clinically relevant information from post-market surveillance, in particular PMCF.

In practice, a clinical evaluation will gather evidence from sources such as:

Published scientific literature (e.g. clinical studies, case series, systematic reviews) on the device or comparable devices.

Clinical experience or registry data, including any compassionate use or expert user feedback available.

Previous clinical investigations or trials – either done by the manufacturer or reported by others – that demonstrate safety/performance.

Equivalence data: data from another device already marketed, if the device under evaluation can be demonstrated to be equivalent in safety/performance characteristics (note: MDR has stricter rules for claiming equivalence than the old directives).

Post-market data, such as complaint records, adverse event reports, or outcomes from PMCF studies and registries once the device is on the market.

For AI software devices, additional relevant data sources can include results of algorithm validation using retrospective datasets, real-world evidence from clinical deployments, and even simulated data if appropriately justified. MDCG 2020-1, the MDR guidance on clinical evaluation of medical device software, suggests using sources such as scientific literature, clinical databases, and expert opinion to establish the scientific validity of the algorithm’s outputs.

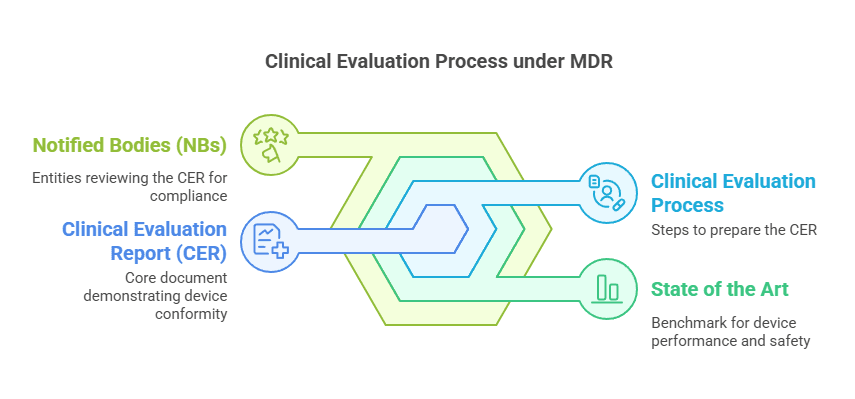

Methodology – Planning, Appraisal, Analysis: Conducting a clinical evaluation is a methodical exercise. MDR Article 61 and Annex XIV require that it follows a defined and methodologically sound procedure, which manufacturers must document in a Clinical Evaluation Plan (CEP) and resulting Clinical Evaluation Report (CER) (health.ec.europa.eu).

The widely used guidance MEDDEV 2.7/1 Rev. 4 (2016), although written for the former directives, remains a common methodological reference for clinical evaluation under the MDR. It outlines a systematic approach in several stages:

Scoping (Stage 0): Define the device, its intended use, claimed clinical benefits, and identify what clinical questions need to be answered. For an AI device, this includes understanding the algorithm’s purpose (e.g. diagnose X disease), the population, and context of use.

Identification of pertinent data (Stage 1): Conduct comprehensive literature searches and collect all relevant pre-market and post-market data on the device and equivalents. This may involve database queries for studies on similar AI algorithms in healthcare, etc.

Appraisal of data (Stage 2): Rigorously assess the quality and relevance of each data set. For example, rate the scientific credibility of a published study (Was it peer-reviewed? Sample size? Biases?) and determine if the results are applicable to the device’s intended use. MDR (and MEDDEV) call for weighting evidence based on factors like study design, population match, and data robustness.

Analysis of the clinical data (Stage 3): Synthesize all accepted data to determine what they collectively say about the device’s safety, performance, and benefit-risk profile. Identify whether the clinical evidence is sufficient to meet MDR’s requirements. Any gaps or unanswered questions are noted here.

Conclusion and Clinical Evaluation Report (Stage 4): Document the findings in a CER, which should justify that the device conforms to the relevant safety and performance requirements, or explain what additional steps (e.g. a new clinical investigation or post-market studies) will be taken to address any gap. The CER is a key part of the technical documentation reviewed by Notified Bodies during conformity assessment.
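To make the appraisal step (Stage 2) more concrete, here is a minimal Python sketch of how appraised data sets might be sorted into tiers. The three rating axes echo the factors named above (device match, population match, study quality), but the 1 to 3 scale, the tier names, and the `DataSet`/`appraise` names are hypothetical choices for illustration, not the MEDDEV method itself; a real CEP would define and justify its own criteria.

```python
from dataclasses import dataclass


# Hypothetical appraisal record: each axis is rated 1 (fully applicable /
# high quality), 2 (minor deviations) or 3 (major deviations / insufficient).
@dataclass
class DataSet:
    source: str
    device_match: int      # 1 = device under evaluation, 2 = equivalent device, 3 = other device
    population_match: int  # 1 = intended target population ... 3 = different population
    quality: int           # 1 = robust design and reporting ... 3 = insufficient


def appraise(ds: DataSet) -> str:
    """Return an illustrative suitability tier for one appraised data set."""
    ratings = (ds.device_match, ds.population_match, ds.quality)
    if any(r not in (1, 2, 3) for r in ratings):
        raise ValueError("each rating must be 1, 2 or 3")
    if 3 in ratings:
        # A major deviation on any axis: background or state-of-the-art use only.
        return "supporting only"
    if ratings == (1, 1, 1):
        return "pivotal"
    return "contributory"


datasets = [
    DataSet("prospective study of the device", 1, 1, 1),
    DataSet("single-site retrospective validation", 1, 2, 2),
    DataSet("published study of a similar (non-equivalent) algorithm", 3, 2, 1),
]
for ds in datasets:
    print(f"{ds.source}: {appraise(ds)}")
```

Keeping the rating logic explicit like this makes the appraisal reproducible each time the CER is updated.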

Notably, a Clinical Evaluation Plan is now explicitly required under MDR (Annex XIV, Part A). The CEP sets out how the manufacturer will perform the evaluation, including the data sources and the criteria for appraising them, and should address special situations such as devices incorporating novel technology (for example, machine learning algorithms). For AI devices, the CEP might need to include plans for algorithm performance validation (e.g. retrospective dataset studies) and criteria for ongoing performance monitoring in the field.

Regulatory Expectations: Regulators and Notified Bodies expect that the clinical evaluation demonstrates sufficient clinical evidence for the device’s intended claims. “Sufficient clinical evidence” means the available data are robust and relevant enough to assure the device’s safety and clinical performance (including its benefits) for the target patients.

MDR does not define a fixed quantity of data; instead, the manufacturer must “specify and justify the level of clinical evidence necessary”, a level that must be appropriate to the characteristics of the device and its intended purpose (health.ec.europa.eu).

For an AI-embedded device, this justification might consider the novelty of the algorithm, the clinical context, and the existence (or lack) of precedent devices. If the evaluation deems the evidence insufficient, the manufacturer is obliged to address this, often by generating new data through a clinical investigation or by strengthening PMCF activities. The MDR also requires the clinical evaluation to be updated throughout the device lifecycle with PMCF and post-market surveillance data, and for Class III and implantable devices the PMCF evaluation report must be updated at least annually (Article 61(11)). Many companies establish a schedule (e.g. annual or biennial CER updates) or trigger updates when significant new information arises (such as a major software update for an AI algorithm or newly published studies).

In summary, clinical evaluation under MDR is a comprehensive, ongoing assessment. It ensures that at any point in the device’s life, the manufacturer has up-to-date knowledge of how the device is performing clinically and whether it continues to meet safety and performance requirements. For AI devices, this process must account for algorithm behavior and real-world usage data, making the continuous evaluation paradigm especially pertinent.

Clinical Investigation: Purpose and Execution

When a Clinical Investigation is Needed: A clinical investigation is typically initiated when the clinical evaluation identifies a need for new clinical data that cannot be obtained from existing sources. MDR Article 61(4) makes clear that for implantable and Class III devices, clinical investigations are required by default to demonstrate conformity, unless an exemption applies.

Exemptions include cases where the device is a modification of a device already marketed by the same manufacturer and equivalence can be demonstrated with sufficient data, or where the device is a “legacy” device lawfully placed on the market under the former directives (MDD/AIMDD) whose clinical evaluation is already based on sufficient clinical data (Article 61(4) to (6)).

Even for lower-risk devices, if the manufacturer’s clinical evaluation shows insufficient evidence of safety or performance, a clinical investigation is warranted before the device can be marketed.

In other words, a new clinical study must be performed whenever the existing clinical data (from literature, prior use, etc.) are inadequate to support the device’s claims or risk-benefit profile. As one industry source explains, a clinical investigation is usually “necessary when the notified body examining the CER decides there is insufficient evidence of efficacy or safety in the studies provided… When this occurs, a clinical investigation may be done to further research the device and ensure it meets MDR standards.”

This could be the case for a novel AI-driven diagnostic tool where no equivalent device exists – the only way to gather evidence might be to conduct a prospective clinical study using the AI device on patients and measuring outcomes.

Additionally, clinical investigations might be required for devices already on the market if there are significant changes or new information. For example, if an AI algorithm is substantially updated (new features or retrained on a new data set), or if new intended uses/patient populations are envisioned, regulators may expect a new clinical investigation or a broadened PMCF study to validate these changes.

MEDDEV 2.7/1 Rev.4 provides a useful list of indications that a clinical investigation is likely needed, including: a completely new design or novel technology, new intended purpose or claims, new target patient groups, use of new materials, higher risk interventions, or other situations where existing data cannot cover the device’s specific risk/benefit questions.

Many of those criteria would apply to cutting-edge AI devices; for example, first-of-a-kind machine learning algorithms for diagnosis would typically require clinical studies to validate their real-world performance.
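The MEDDEV indications listed above can be turned into a simple screening checklist for a CEP or a change record. The sketch below is hypothetical: the flag names and the idea of encoding them in code are illustrative assumptions, and a positive flag only means that the need for a clinical investigation should be assessed and justified, not that the MDR mandates one.

```python
from typing import Dict, List

# Indications paraphrased from the MEDDEV 2.7/1 Rev.4 list summarised above.
# The dictionary keys are hypothetical names chosen for this sketch.
INDICATIONS: Dict[str, str] = {
    "novel_technology": "completely new design or novel technology",
    "new_intended_purpose": "new intended purpose or claims",
    "new_population": "new target patient group",
    "new_materials": "use of new materials",
    "higher_risk_use": "higher-risk intervention",
    "data_gap": "existing data cannot answer specific benefit-risk questions",
}


def investigation_flags(profile: Dict[str, bool]) -> List[str]:
    """List the indications that apply; any hit calls for a documented assessment."""
    return [text for key, text in INDICATIONS.items() if profile.get(key, False)]


# Example: a first-of-a-kind diagnostic algorithm aimed at a new patient group.
flags = investigation_flags({"novel_technology": True, "new_population": True})
for flag in flags:
    print("-", flag)
```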

Regulatory Oversight and Standards: Clinical investigations in Europe are governed by strict regulatory and ethical controls. MDR Article 62 and further provisions (Articles 63–80 and Annex XV) lay out general requirements for any clinical investigation intended to support device CE marking. These include obtaining ethics committee approval, competent authority authorization, informed consent of subjects, safety monitoring, and adherence to Good Clinical Practice (GCP). ISO 14155:2020 “Clinical investigation of medical devices for human subjects – Good Clinical Practice” is the key standard that defines GCP for device trials.

Following ISO 14155 is not a legal requirement per se, but it represents the state of the art, and compliance with it is effectively expected as the recognised way to show that an investigation meets the ethical and scientific quality standards the MDR demands. GCP principles aim to protect the rights, safety, and well-being of trial subjects and to ensure data integrity. For instance, ISO 14155 covers requirements for study protocol design, investigator qualifications, risk management, data recording, and reporting of results.

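Under MDR, a device used in a clinical investigation must also meet certain safety provisions: even a prototype that is not yet CE marked must conform to the applicable general safety and performance requirements apart from the aspects covered by the investigation, and for those aspects every precaution must be taken to protect the subjects’ health and safety, with risk controls in place (Article 62(4) and Annex XV).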

Conduct of a Clinical Investigation: A clinical investigation for a medical device (including AI-enabled devices) will typically involve human participants in a healthcare setting to collect data on how the device performs and to observe any clinical outcomes or adverse events. For therapeutic devices, this might resemble a traditional clinical trial (e.g., patients receiving a treatment and being followed-up for efficacy and side effects). For diagnostic AI software, a clinical investigation might mean a study in which the software’s diagnoses or risk predictions are compared against a gold standard and the impact on clinical decision-making is measured. Key elements include:

Clinical Investigation Plan (CIP): a detailed protocol describing the study design, objectives, endpoints (safety and performance metrics), subject criteria, procedures, and statistical analysis plan. Under MDR Annex XV, the CIP must include extensive information (justification, risk-benefit analysis, monitoring plan, etc.), similar in rigor to pharmaceutical trial protocols.

Approvals: Prior to commencing, the manufacturer (or sponsor) must obtain approval from an Ethics Committee and in many cases a notification or authorization from the National Competent Authority of the Member State where the trial will be conducted. Only when regulatory clearance is given can subject enrollment begin.

Good Clinical Practice Compliance: The investigation must be conducted per GCP. That means investigators are trained, patients give informed consent, data are collected reliably, and any adverse events are reported and analyzed. ISO 14155 provides guidance on all these aspects, and compliance with ISO 14155 is considered evidence of adhering to GCP. MDR Article 72 in turn requires the sponsor and investigator to conduct the investigation in accordance with the approved CIP, with adequate monitoring to ensure that subjects are protected and that the reported data are reliable and robust.

Data and Results: The outcome of a clinical investigation is typically a Clinical Investigation Report, which includes all the data analysis and findings. These results become part of the clinical evidence for the device. The manufacturer incorporates the findings into the clinical evaluation, updating the CER with the new clinical data and reassessing the benefit-risk profile in light of them. This reflects the MDR’s lifecycle approach, in which data from clinical investigations are one input to a continuously updated clinical evaluation.
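For a diagnostic AI investigation, the statistical analysis plan in the CIP usually has to justify the sample size. A minimal sketch, assuming the primary endpoints are sensitivity and specificity and using Buderer's normal-approximation formula (one common choice, not something the MDR prescribes); the target values, expected prevalence, and precision below are hypothetical.

```python
import math


def buderer_sample_size(sensitivity: float, specificity: float,
                        prevalence: float, precision: float,
                        z: float = 1.96) -> int:
    """Total enrolment needed to estimate sensitivity and specificity within
    +/- precision, using Buderer's normal-approximation formula."""
    if not 0 < prevalence < 1:
        raise ValueError("prevalence must be strictly between 0 and 1")
    # Subjects WITH the condition needed for the sensitivity estimate.
    n_cases = z**2 * sensitivity * (1 - sensitivity) / precision**2
    # Subjects WITHOUT the condition needed for the specificity estimate.
    n_controls = z**2 * specificity * (1 - specificity) / precision**2
    # Scale each requirement by how often such subjects occur among enrolled patients.
    total_for_sens = n_cases / prevalence
    total_for_spec = n_controls / (1 - prevalence)
    return math.ceil(max(total_for_sens, total_for_spec))


# Hypothetical targets: 95% sensitivity, 92% specificity, 20% prevalence, +/-5% precision.
n = buderer_sample_size(0.95, 0.92, prevalence=0.2, precision=0.05)
print(n)  # 365
```

Because prevalence sits in the denominator, a rare target condition drives the enrolment figure up sharply; with these inputs the sensitivity requirement dominates.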

It’s worth noting that clinical investigations can be pre-market or post-market. Pre-market studies aim to gather evidence for initial CE marking. Post-market clinical investigations (one form of PMCF) might be conducted after CE marking to answer specific questions (for instance, long-term performance, or performance in a broader population). In the context of AI, a post-market clinical study might be set up to monitor the AI’s performance over time or to collect data in a new hospital environment. MDR Article 74 covers clinical investigations of CE-marked devices, including PMCF investigations, and Article 77 sets out the sponsor’s reporting obligations at the end of an investigation.

Ethical Considerations: From a regulatory perspective, one important reason a clinical evaluation is performed before initiating an investigation is to ensure that a new clinical study on humans is ethically justifiable. By first exhausting existing knowledge through clinical evaluation, the manufacturer can design the clinical investigation in a way that avoids unnecessary risks to subjects. For example, if literature data already answer certain safety questions, the new investigation can focus only on the unanswered questions, minimizing redundant exposure of patients to potential risk. The clinical evaluation provides the scientific rationale for the study and helps in formulating sound hypotheses and endpoints. In fact, as part of an investigation application, authorities often expect to see a summary of the prior clinical evaluation (sometimes in the form of a Clinical Evaluation Report excerpt or a justification in the CIP) explaining why additional clinical data are needed and how the proposed study will address that need. Thus, performing a thorough clinical evaluation first is not only a regulatory requirement but also a safeguard for trial participants, ensuring that human studies are done only when necessary and with proper context. According to industry guidance, “one way to differentiate… is to remember that a clinical evaluation is always necessary… it can lead to a clinical investigation if additional data is needed… but a clinical investigation is not always a part of the process.”

The MDR reinforces this principle by requiring that any absence of clinical data be justified and, if reliance on existing data is not adequate, a new investigation must be carried out.

Summary of Differences: To highlight the differences between clinical evaluation and clinical investigation in practice:

Nature: A clinical evaluation is an analytical process (mostly desk-based research and analysis of data), whereas a clinical investigation is an experimental process (prospective collection of data from human subjects).

Scope: Clinical evaluation covers all relevant data on the device (broad scope: literature, prior studies, etc.), while a clinical investigation focuses on a defined set of objectives (narrow scope: answer specific questions via a new study).

Timing: Clinical evaluation is iterative and ongoing (pre-market and post-market), updated throughout the device’s life. A clinical investigation is time-bound, with a start and end, often occurring pre-market (for novel devices) or as part of PMCF.

Requirement: Clinical evaluation is required for every device per MDR (no exceptions). Clinical investigations are required when needed: commonly for high-risk or novel devices, or when existing evidence is lacking. For example, many low- to medium-risk devices may not need new investigations if literature and prior use provide sufficient evidence. Class III and implantable devices require an investigation unless one of the Article 61 exemptions applies, such as demonstrated equivalence to a device whose data can be leveraged.

Regulatory Documentation: The outcome of clinical evaluation is the Clinical Evaluation Report (CER), part of the technical file for CE marking. The outcome of a clinical investigation is a Clinical Investigation Report (and potentially publications), which then feed into the CER. Where a Notified Body is involved, it reviews the CER in depth, while the conduct of a clinical investigation is overseen by national authorities (through approvals and potential inspections for GCP compliance).

Standards and Guidance: Clinical evaluation processes are guided by MDR Annex XIV and MEDDEV 2.7/1 Rev.4 (and MDCG guidance like 2020-1 for software), emphasizing literature appraisal and scientific validity. Clinical investigations are guided by MDR Annex XV and ISO 14155 (GCP standard), emphasizing trial ethics, protocol quality, and data credibility. Both ultimately serve the same goal – demonstrating device compliance – but one is a continual analysis activity and the other is a data-gathering activity.

Special Considerations for AI-Embedded Medical Devices

AI-embedded medical devices (often software-based, such as diagnostic algorithms, clinical decision support tools, or “smart” therapeutic devices) introduce unique challenges and considerations in both clinical evaluation and clinical investigation. The MDR applies to AI-based devices just as to any other, but the adaptive and data-driven nature of AI software means manufacturers must pay particular attention to how they generate and assess clinical evidence.

Adaptive Algorithms and Continuous Learning: One characteristic of many AI systems, especially those using machine learning, is the ability to adapt or improve over time as they are exposed to new data. This can blur the line between pre-market and post-market performance. A key regulatory expectation is that the version of an AI algorithm that is CE-marked is “locked” (its learning weights or logic fixed) or at least controlled within known boundaries at the time of market approval. Any significant changes to the algorithm (e.g. retraining on new data, adding new input features) would typically require a new clinical evaluation – and possibly a new conformity assessment – to ensure the modified device still meets the requirements. Therefore, clinical evaluation for AI devices must be especially vigilant and iterative. The evaluation should establish not only current performance but also define the mechanism for monitoring performance as the AI is used in the real world. Post-market clinical follow-up is crucial here: for example, the manufacturer might deploy the AI in a limited setting initially and collect real-world performance metrics (accuracy, error rates, patient outcomes) as PMCF data, then update the clinical evaluation and potentially refine the algorithm accordingly. MDR essentially mandates this by requiring PMCF to update the clinical evaluation continuously, which is highly pertinent for AI where real-world data can surface new issues (like discovery of an edge-case scenario where the AI fails, or gradual performance drift due to changes in input data characteristics over time).
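The post-market monitoring described above can be sketched as a rolling-window check of confirmed AI outputs against the accuracy established before market. This is a minimal illustration under stated assumptions: each case eventually receives a ground-truth confirmation, accuracy is the chosen metric, and the baseline, tolerance, and window size are hypothetical values that a real PMCF plan would have to justify.

```python
from collections import deque
from typing import Optional


class DriftMonitor:
    """Rolling-window accuracy check against a pre-market baseline.

    The thresholds are hypothetical; a PMCF plan would define and justify them.
    """

    def __init__(self, baseline_accuracy: float, tolerance: float, window: int):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        # Most recent confirmed cases: 1 = AI output matched ground truth, 0 = it did not.
        self.results = deque(maxlen=window)

    def record(self, correct: bool) -> None:
        self.results.append(1 if correct else 0)

    def rolling_accuracy(self) -> Optional[float]:
        if len(self.results) < self.results.maxlen:
            return None  # window not yet full: too few cases for a stable estimate
        return sum(self.results) / len(self.results)

    def drift_detected(self) -> bool:
        acc = self.rolling_accuracy()
        return acc is not None and acc < self.baseline - self.tolerance


# Simulated field data: 17 of every 20 confirmed cases correct (85% accuracy).
monitor = DriftMonitor(baseline_accuracy=0.95, tolerance=0.03, window=200)
for i in range(200):
    monitor.record(i % 20 >= 3)
print(monitor.rolling_accuracy(), monitor.drift_detected())
```

A flag from such a monitor would feed the PMCF evaluation and, if confirmed, the clinical evaluation update; it does not by itself decide any corrective action.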

Validity, Performance, and Benefit Considerations: Clinical evaluation of AI software focuses on three pillars, often referred to (in IMDRF and MDCG 2020-1 guidance) as the “three proofs” or types of evidence for software: (a) Scientific Validity (Valid Clinical Association), (b) Technical Performance, and (c) Clinical Performance:

Scientific Validity (Valid Clinical Association): This addresses whether the algorithm’s output is actually clinically relevant to the target condition or outcome. In MDCG 2020-1, this is defined as “the extent to which the software’s output (e.g., a calculated score or prediction), based on the inputs and algorithms, is associated with the targeted clinical condition or physiological state”. For an AI device, the manufacturer must show that there is a sound clinical rationale or scientific evidence linking what the AI measures or predicts to real health outcomes. For example, if an AI predicts the risk of sepsis from vital signs, is that prediction clinically meaningful and grounded in medical science? Demonstrating this may rely on literature (to show that the algorithm’s inputs and approach have a basis in known clinical evidence) or on analytical studies correlating the AI output with known patient statuses. Ensuring scientific validity is part of the clinical evaluation – the CER should discuss how the AI’s function is rooted in accepted scientific and medical principles. If an AI uses a completely novel biomarker or pattern with no precedent, this increases the need for robust clinical investigation to prove the validity of the concept itself.

Technical Performance: This is about the algorithm’s accuracy, reliability, and precision in processing data – essentially verifying that “the software correctly and reliably produces an output from given input data”. For AI, technical performance is often demonstrated through verification and validation testing, such as testing on curated data sets, cross-validation of the model, and stress-testing edge cases. For instance, the clinical evaluation of an AI device might include validation results such as: Algorithm XYZ was tested on a retrospective dataset of 10,000 images and achieved 95% sensitivity and 92% specificity for detecting Disease A. Results like these demonstrate technical performance. Such evidence might come from non-clinical studies (using historical data, which could be considered a form of clinical data per MDR if it involves patient records) and forms part of the pre-market clinical evidence. If technical performance is insufficient (say the AI is not accurate enough), the clinical evaluation would identify this, and the algorithm would likely need improvement and re-validation before proceeding. That may rule out a clinical investigation until the algorithm reaches a basic level of performance that makes testing on patients safe, since ISO 14155 and research ethics argue against exposing patients to a clearly underperforming algorithm.

Clinical Performance: This refers to the ultimate effect of the software on clinical outcomes or decision-making – “the ability of the MDSW to yield clinically relevant outputs in accordance with its intended purpose”. In other words, does using the AI device confer the intended clinical benefit? This is often the hardest proof and usually requires clinical investigation or real-world clinical studies. For example, if an AI is intended to help detect cancer earlier, the clinical performance could be demonstrated by a study showing that doctors using the AI detected more cancers (and earlier) than those who did not, without increasing false positives excessively. Data for clinical performance may come from prospective clinical investigations conducted by the manufacturer, or from post-market studies and user feedback. Manufacturers are encouraged to use existing data if available, but for novel AI devices, often there is no substitute for conducting a clinical study. The clinical evaluation for an AI device must consider clinical performance data – if only analytical/technical validation exists and no data on patient impact, the evaluation will likely conclude that a clinical investigation is needed to obtain evidence of the device’s real-world benefit.
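The technical-performance figures in the Algorithm XYZ example (95% sensitivity and 92% specificity on 10,000 images) come from a confusion matrix, and reporting them with confidence intervals shows how precise they are. The sketch below assumes a hypothetical split of 2,000 images with Disease A and 8,000 without, and uses the Wilson score interval as one reasonable choice of interval.

```python
import math
from typing import Dict, Tuple


def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score confidence interval for a binomial proportion (z=1.96 ~ 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


def diagnostic_metrics(tp: int, fn: int, tn: int, fp: int) -> Dict[str, object]:
    """Sensitivity and specificity with Wilson intervals from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),
        "sensitivity_ci": wilson_interval(tp, tp + fn),
        "specificity": tn / (tn + fp),
        "specificity_ci": wilson_interval(tn, tn + fp),
    }


# Hypothetical split of the 10,000-image example: 2,000 with Disease A, 8,000 without.
m = diagnostic_metrics(tp=1900, fn=100, tn=7360, fp=640)
for name in ("sensitivity", "specificity"):
    low, high = m[f"{name}_ci"]
    print(f"{name}: {m[name]:.1%} (95% CI {low:.1%} to {high:.1%})")
```

With denominators this large the intervals are narrow; the same calculation on a small subgroup gives much wider intervals, which is one reason subgroup claims need their own evidence.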

These three aspects are intertwined in the clinical evaluation of AI software. MDCG 2020-1 and IMDRF guidance (IMDRF SaMD Clinical Evaluation) provide a framework for thinking through them. When documenting the CER for an AI device, manufacturers typically need to address how the device was validated: Did they demonstrate the algorithm’s analytical accuracy (technical performance)? Is the algorithm built on a clinically sound premise (scientific validity)? And do they have evidence of improved outcomes or effective clinical use (clinical performance)? If any of these are lacking, that gap often translates into a need for further data collection, be it additional bench testing, retrospective studies, or prospective clinical investigations.
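For the clinical-performance example (clinicians detecting more cancers with the AI than without), a study would compare detection rates between study arms. A minimal sketch, assuming two independent groups of confirmed cancer cases and hypothetical counts; a paired reader study, in which the same clinicians read with and without AI, would instead need a paired method such as McNemar's test, and a real CIP would pre-specify its analysis.

```python
import math
from typing import Tuple


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> Tuple[float, float, float]:
    """Two-sided z-test for a difference between two independent proportions.

    Returns (difference p1 - p2, z statistic, two-sided p-value).
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # common proportion under the null hypothesis
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p1 - p2, z, p_value


# Hypothetical counts: cancers detected among confirmed cases in each arm.
diff, z, p = two_proportion_z_test(x1=90, n1=120, x2=72, n2=120)
print(f"difference {diff:.1%}, z = {z:.2f}, p = {p:.3f}")
```

The same comparison could be run on false-positive rates to check the "without increasing false positives excessively" part of the claim.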

Generalisability and Bias: A specific challenge for AI is ensuring that the trained model performs well across the intended patient population and usage settings. AI algorithms can be biased: a model trained mostly on adult data might perform poorly in children, and one trained predominantly on one ethnic group may be less accurate in another. MDCG 2020-1 addresses this explicitly: “Generalisability refers to the ability of a medical device software to extend the intended performance tested on a specified set of data to the broader intended population.” In the clinical evaluation, the manufacturer of an AI device should discuss how generalisable the results are. For instance, if all clinical data came from one hospital or one country, are they representative of the broader EU patient population? If not, the evaluation may identify a need for more diverse data (perhaps through a new clinical investigation across varied settings) or for a cautious approach in labelling (restricting the intended patient population until more data are gathered).

Post-market surveillance plays a major role here: once the AI device is on the market, the manufacturer should actively collect performance data across subgroups and update the clinical evaluation accordingly. If performance issues emerge (for example, lower accuracy for a particular demographic group), the manufacturer may need to take corrective action or update the model. Regulators will expect the CER to be kept current with such findings.
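To make the subgroup review more concrete, here is a minimal Python sketch (not a method prescribed by MDR or MDCG 2020-1) that computes the AI's sensitivity per subgroup and flags subgroups that fall noticeably below the overall figure or have too few positive cases to judge. The `Case` record, the subgroup labels, the synthetic data, and the thresholds `max_drop=0.05` and `min_positives=30` are all hypothetical choices for illustration; in a real clinical evaluation, the subgroups and acceptance criteria would be prespecified in the clinical evaluation plan and justified statistically (for example, with confidence intervals rather than point estimates).

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple, Tuple


class Case(NamedTuple):
    """One validation case (hypothetical record layout)."""
    subgroup: str      # e.g. age band, clinical site or scanner vendor
    truth: bool        # reference standard: condition present?
    ai_positive: bool  # did the AI flag the case as positive?


def detections_by_subgroup(cases: Iterable[Case]) -> Dict[str, Tuple[int, int]]:
    """Return {subgroup: (true positives, reference-standard positives)}."""
    positives: Dict[str, int] = defaultdict(int)
    detected: Dict[str, int] = defaultdict(int)
    for case in cases:
        if case.truth:  # sensitivity only uses truly positive cases
            positives[case.subgroup] += 1
            if case.ai_positive:
                detected[case.subgroup] += 1
    return {g: (detected[g], positives[g]) for g in positives}


def review_generalisability(cases, max_drop=0.05, min_positives=30):
    """Compare each subgroup's sensitivity with the overall sensitivity.

    max_drop and min_positives are illustrative thresholds, not regulatory values.
    """
    counts = detections_by_subgroup(cases)
    total_pos = sum(n for _, n in counts.values())
    if total_pos == 0:
        raise ValueError("no reference-standard positive cases")
    overall = sum(d for d, _ in counts.values()) / total_pos
    findings = {}
    for group, (det, n) in sorted(counts.items()):
        if n < min_positives:
            findings[group] = "insufficient data"  # too few positives to judge
        elif overall - det / n > max_drop:
            findings[group] = "below overall"      # candidate gap for the CER
        else:
            findings[group] = "consistent"
    return overall, findings


# Synthetic example data, invented purely for illustration.
cases = (
    [Case("adult", True, True)] * 92 + [Case("adult", True, False)] * 8
    + [Case("adult", False, False)] * 50
    + [Case("child", True, True)] * 30 + [Case("child", True, False)] * 10
    + [Case("elderly", True, True)] * 9 + [Case("elderly", True, False)] * 1
)
overall, findings = review_generalisability(cases)
print(round(overall, 3), findings)
# 0.873 {'adult': 'consistent', 'child': 'below overall', 'elderly': 'insufficient data'}
```

In this toy data, the "child" subgroup would be a candidate for further data collection or a labelling restriction, while "elderly" cannot be judged at all from ten positive cases, which is exactly the kind of gap post-market data would need to close.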

Handling Algorithm Updates: Because AI software may be updated frequently (to fix bugs or to improve performance), each significant update raises the question: does it alter the device’s clinical performance or safety profile? Under MDR, a significant change to a device may require the conformity assessment to be revisited with the Notified Body. Manufacturers of AI devices therefore often implement a change management protocol that includes an update of the clinical evaluation for each new software version. For example, if a new version of an AI imaging software adds a capability (detecting an additional disease), the manufacturer should update the clinical evaluation: include any new validation data for that version, assess whether the existing clinical evidence still applies or new clinical studies are required, and possibly perform a targeted clinical investigation for the new indication. The clinical evaluation performed before the update is deployed should justify whether the change can be introduced safely (perhaps under PMCF monitoring) or whether Notified Body approval is needed before release.

Regulatory Guidance for AI: As of 2025, MDR has no AI-specific rules separate from the general device requirements, but guidance documents such as MDCG 2020-1 (clinical evaluation of software) and MDCG 2019-11 (qualification and classification of software) provide insight. In addition, the EU has adopted the AI Act (Regulation (EU) 2024/1689), a separate regulatory framework that classifies AI systems that are medical devices requiring Notified Body conformity assessment under MDR as high-risk, and that is designed to work in tandem with MDR. For now, manufacturers should follow MDR’s existing provisions: demonstrate compliance with the GSPRs through sufficient clinical evidence, just as for any other device. For AI software, MDCG 2020-1 is particularly relevant; it emphasizes that software (including AI) must be clinically evaluated according to the same principles as other devices, with adaptations for the software context. For example, MDCG 2020-1 points out the need to consider the state of the art of algorithms and to use “iterative” validation approaches when algorithms improve over time. It also underscores the importance of transparency in algorithm logic and outputs as part of the clinical evaluation, so that clinicians can trust and effectively use the AI’s results (an aspect that ties into human factors as well as effective performance).

From the clinical investigation perspective, running a trial for an AI device may involve distinctive design choices. AI diagnostic tools are often tested in a reader study, in which clinicians make diagnoses with and without AI assistance. Another approach is an outcomes study, which randomizes patients to AI-supported care or standard care to see whether outcomes differ. The study design must be chosen according to which aspect of performance needs evidence. ISO 14155 applies equally, ensuring data quality and patient safety. Notably, some AI devices can be tested with retrospective data rather than prospective enrollment (for instance, using stored medical images to measure the AI’s detection rate). Retrospective analyses of existing patient data can provide valid clinical evidence and may be acceptable as part of a clinical evaluation without a new interventional study, especially when the study does not influence patient care. However, if the AI’s effect on clinical decision-making or outcomes needs to be proven, a prospective clinical investigation is typically required. Manufacturers should engage early with their Notified Body to agree on what form of clinical evidence is sufficient for an AI device: a well-designed retrospective study can form part of the clinical evidence package, but if the Notified Body is not convinced, a prospective trial will be needed.
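The core comparison in a reader study can be sketched as follows. This is a minimal Python illustration on invented data, not a validated statistical method: each hypothetical `Read` record holds one reader's call on one case both without and with AI assistance, and the code reports pooled sensitivity and specificity for each mode plus the per-reader change. A real reader study would use a prespecified multi-reader multi-case (MRMC) analysis with confidence intervals rather than these point estimates.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class Read:
    """One reader's interpretation of one case (hypothetical record layout)."""
    reader: str
    truth: bool    # reference standard: condition present?
    unaided: bool  # reader's call without AI assistance
    aided: bool    # reader's call with AI assistance


def sens_spec(reads: Sequence[Read], mode: str) -> Tuple[float, float]:
    """Sensitivity and specificity for mode 'unaided' or 'aided'."""
    tp = fn = tn = fp = 0
    for r in reads:
        call = getattr(r, mode)
        if r.truth and call:
            tp += 1
        elif r.truth:
            fn += 1
        elif call:
            fp += 1
        else:
            tn += 1
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need both positive and negative cases")
    return tp / (tp + fn), tn / (tn + fp)


def per_reader_delta(reads: Sequence[Read]) -> Dict[str, Tuple[float, float]]:
    """Per-reader change (aided minus unaided) in sensitivity and specificity."""
    by_reader: Dict[str, List[Read]] = defaultdict(list)
    for r in reads:
        by_reader[r.reader].append(r)
    deltas = {}
    for reader, rs in sorted(by_reader.items()):
        sens0, spec0 = sens_spec(rs, "unaided")
        sens1, spec1 = sens_spec(rs, "aided")
        deltas[reader] = (sens1 - sens0, spec1 - spec0)
    return deltas


# Synthetic example: two readers, 10 positive and 10 negative cases each.
reads = (
    [Read("R1", True, True, True)] * 6 + [Read("R1", True, False, True)] * 2
    + [Read("R1", True, False, False)] * 2
    + [Read("R1", False, True, True)] * 1 + [Read("R1", False, False, True)] * 1
    + [Read("R1", False, False, False)] * 8
    + [Read("R2", True, True, True)] * 7 + [Read("R2", True, False, True)] * 2
    + [Read("R2", True, False, False)] * 1
    + [Read("R2", False, True, True)] * 1 + [Read("R2", False, False, False)] * 9
)
print(sens_spec(reads, "unaided"), sens_spec(reads, "aided"))  # (0.65, 0.9) (0.85, 0.85)
for reader, (d_sens, d_spec) in per_reader_delta(reads).items():
    print(reader, round(d_sens, 2), round(d_spec, 2))
```

The toy result mirrors the trade-off described in the clinical performance discussion: AI assistance raises sensitivity for both readers, but one reader's specificity drops, so a study would need a prespecified criterion for what counts as an acceptable increase in false positives.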

Summary for AI Devices: AI-embedded devices must meet the same regulatory expectations for clinical evaluation and, where needed, clinical investigation. The continuous nature of clinical evaluation is especially important because AI algorithms can evolve and their real-world performance may only be fully understood with extensive data. Manufacturers should ensure their clinical evaluation addresses scientific validity, technical performance, and clinical performance, drawing on guidance such as MDCG 2020-1. If gaps exist (and for novel AI they often do, especially in demonstrating clinical benefit), a well-structured clinical investigation conducted according to ISO 14155 GCP is usually needed to generate that evidence. Throughout, post-market data collection and vigilance are crucial: AI devices may require more frequent CER updates (possibly every few months for fast-learning systems) than traditional devices. By maintaining a robust, up-to-date clinical evaluation and conducting clinical investigations when appropriate, manufacturers can give regulators and users confidence that, even as an AI system adapts or encounters new scenarios, its safety and performance remain under careful oversight.

Final Thoughts

Clinical evaluation and clinical investigation are complementary components of an EU MDR compliance strategy for medical devices, including those with AI components. Clinical evaluation is a foundational, continuous process that every manufacturer must perform to demonstrate the device’s safety, performance, and clinical benefit on the basis of available evidence. It is an ongoing requirement throughout the device’s market life, sustained by mechanisms such as PMCF. A clinical investigation, by contrast, is one powerful means of obtaining clinical data: essentially a tool that feeds the clinical evaluation. Governed by MDR Articles 62 to 82 and Annex XV, with ISO 14155 and ethical requirements defining good practice, clinical investigations provide high-quality evidence when existing data are insufficient. MDR reinforces that the clinical evaluation should precede and guide any investigation, ensuring that new studies are justified and targeted at unanswered questions. For AI-embedded medical devices in particular, where technology evolves rapidly and behavior can be complex, a rigorous clinical evaluation process is vital for identifying what evidence is needed, and well-designed clinical investigations are often the key to gathering trustworthy data on the algorithm’s real-world performance.

From a regulatory perspective, one could say that MDR requires that no device (AI or otherwise) reaches patients without a documented, up-to-date clinical evaluation demonstrating compliance with safety and performance requirements. If that evaluation reveals any shortfall in evidence, the manufacturer must proactively fill the gap, frequently by undertaking a clinical investigation. This iterative loop (evaluate -> investigate -> re-evaluate -> monitor -> update) embodies MDR’s lifecycle approach to clinical evidence and patient safety. By adhering to these processes and leveraging guidance from MDCG and MEDDEV along with international standards, innovators in AI medical technology can achieve regulatory compliance and ensure that their AI-driven devices deliver on their promise of clinical benefit while upholding the highest standards of safety and performance. The end result is a robust, evidence-backed conformity assessment that satisfies regulators and Notified Bodies and gives healthcare professionals and patients confidence in the use of AI-enabled medical devices.


✌️ Peace,

Hatem Rabeh, MD, MSc Ing

Your Clinical Evaluation Expert & Partner
