AI in Medical Device Software: EU Definition, Guidance, and Clinical Evaluation Implications

The EU AI Act’s Definition of “AI System”

One cornerstone of the EU’s Artificial Intelligence Act (AIA) is how it defines Artificial Intelligence (AI). According to Article 3 of the AI Act, an “AI system” is:

“a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.”

This legal definition is quite broad. It highlights several key attributes of AI systems under EU law:

Machine-based and Autonomous: The system can be software (or a combination of software and hardware) that operates with some degree of autonomy (i.e. it doesn’t need constant human control).

Adaptiveness: The system may learn or evolve after deployment. (Not all AI must be adaptive, but the definition allows for systems that improve or change based on new data – a nod to machine learning.)

Inferencing from Inputs: The AI processes inputs and infers how to produce outputs (e.g. making predictions or decisions) without hard-coded outputs for every scenario. In other words, it uses algorithms or models to derive outcomes from data.

Outputs That Influence Environments: The outputs (whether a recommendation to a doctor or an automated decision in software) can affect physical or virtual environments. This could mean influencing real-world actions (like adjusting a medical treatment) or virtual outcomes (like flagging an image for review).

Crucially, this definition doesn’t list specific techniques; it is technology-neutral. It captures systems using machine learning, knowledge-based systems, or other algorithmic approaches as long as they meet the above criteria. The European Commission’s guidelines on this definition clarify that traditional or simplistic software without “learning” or complex inference would not count as AI under the Act. For example, a simple formula or fixed threshold (like a basic risk calculator using linear regression without any adaptive capability) is not considered an AI system in this context. This helps distinguish advanced AI-driven software from conventional software: if your medical device software uses complex decision-making or learning algorithms, it likely falls under this AI definition.

Regulatory Guidance: How AI is Understood in Medical Devices

With the AI Act’s broad definition in mind, how do EU regulators apply it to medical device software? Several official bodies, including the European Commission, the Medical Device Coordination Group (MDCG), and the Artificial Intelligence Board (AIB) established under the AI Act, have issued guidance to clarify the intersection of AI and medical device regulations. Here are the key points from that guidance:

“Medical Device AI” (MDAI): Regulators often use the term MDAI to refer to AI systems intended for medical use. This covers AI-based software that is a medical device itself, as well as AI components within devices. Notably, it also extends to in vitro diagnostic (IVD) software and device accessories that incorporate AI. In short, if AI is used in a healthcare product regulated by the MDR or IVDR, it’s considered MDAI for regulatory discussions.

Complementary Frameworks: MDR/IVDR and AIA: The Medical Devices Regulation (MDR) and In Vitro Diagnostic Regulation (IVDR) already impose strict requirements on software, but they did not explicitly address AI-specific risks. The new AI Act fills that gap. According to joint MDCG and AI Board guidance, the AIA “complements the MDR/IVDR by introducing requirements to address hazards and risks for health, safety and fundamental rights specific to AI systems.” These frameworks are meant to apply simultaneously and in a complementary way for medical devices that include AI. In practice, this means an AI-driven medical device must comply with MDR/IVDR (for safety, performance, and clinical evidence) and also meet additional AI Act requirements (for example, on data governance, transparency, and robustness of the AI algorithm). The two sets of rules are designed to mesh together rather than conflict.

EU Commission’s Definition Guidelines: In February 2025, the European Commission published guidelines on the AI system definition to help manufacturers determine if their software counts as an AI system. These guidelines (which are non-binding but highly informative) reiterate the points in the legal definition and provide examples. They emphasize that an AI system usually exhibits some form of autonomy and inference, and they confirm that systems using advanced techniques like machine learning, neural networks, or knowledge-based logic are within scope. On the other hand, purely deterministic software that lacks any learning or complex decision mechanism might be outside the AI Act’s scope. The goal is to ensure companies know when the AI Act applies to their product. (The Commission plans to update these guidelines as AI technology evolves.)

When is Medical AI “High-Risk”? A central concept of the AI Act is “high-risk AI systems,” which face the strictest requirements. Most medical AI software will fall into this category by design. The AI Act itself classifies AI systems as high-risk if they are either safety components of regulated products or are themselves products in certain sectors and they undergo third-party assessment under sectoral law. In the healthcare context, the guidance makes this concrete: an AI-based medical device is high-risk under the AI Act if it (1) is intended to function as a medical device (or as a safety component of one) and (2) is subject to a notified body conformity assessment under the MDR/IVDR. In simpler terms, if your AI software is a medical device that isn’t Class I (i.e. it requires a notified body review, as most diagnostic or therapeutic software does), then it will be considered high-risk AI. This triggers a suite of obligations from the AI Act on top of the MDR/IVDR requirements.
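The two-part test above can be written down as a small decision helper. This is only a sketch of the logic as described in this article: the `DeviceProfile` class, its field names, and `is_high_risk_mdai` are hypothetical names introduced for illustration, and the answers to each field still have to come from a proper regulatory assessment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceProfile:
    """Hypothetical summary of a product's regulatory status (all fields are assumptions)."""
    is_ai_system: bool                   # meets the AI Act Article 3 definition
    is_device_or_safety_component: bool  # medical device / IVD, or a safety component of one
    needs_notified_body: bool            # third-party conformity assessment under MDR/IVDR


def is_high_risk_mdai(profile: DeviceProfile) -> bool:
    """Return True only when the product is an AI system and both conditions hold."""
    return (
        profile.is_ai_system
        and profile.is_device_or_safety_component
        and profile.needs_notified_body
    )


# Illustrative profiles: an AI device reviewed by a notified body, and one that is not.
reviewed_ai_device = DeviceProfile(True, True, True)
self_declared_ai_device = DeviceProfile(True, True, False)

print(is_high_risk_mdai(reviewed_ai_device))       # True
print(is_high_risk_mdai(self_declared_ai_device))  # False
```

Keeping the three questions as separate fields mirrors how the assessment is usually documented: each answer needs its own justification in the technical file.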

Areas of Overlap – and New Obligations: Many requirements of the AI Act align with existing MDR principles, but some add new dimensions:

Risk Management & Post-Market Monitoring: MDR already mandates risk management and post-market surveillance for devices. The AI Act builds on this with continuous risk management specific to AI, including monitoring for new AI-related risks (e.g. performance drift or evolving safety issues as the model encounters new data).

Data and Bias Control: The MDR (and the MDCG’s guidance) stress that clinical data for device evaluation must be robust and representative of the intended patient population. The AI Act reinforces this with explicit requirements on data governance (Article 10 AIA). For instance, training data should be relevant, free of unacceptable bias, and of high quality so that the AI’s outputs are sound. Manufacturers of medical AI need to document how they handle data, from training and validation through to real-world use, to minimize bias and errors.

Transparency and Information to Users: Both MDR and the AI Act demand transparency about how an AI device operates. The AI Act requires that users are informed they are interacting with an AI system (unless it’s obvious) and that clear instructions for use are provided. MDR’s General Safety and Performance Requirements (GSPRs) similarly insist on clear information, including the device’s intended purpose, limitations, and proper use. For AI, this translates to explaining the algorithm’s role in decision-making, its intended medical context, and any important limitations (e.g. “Not validated in children under 12” or “Performance may be reduced with low-quality images”). Regulators want AI to be explainable and not a “black box” to those using it in healthcare.

Human Oversight: The AI Act introduces the concept of human oversight for high-risk AI. In a medical device setting, this complements MDR requirements for usability and risk control. Manufacturers should design AI devices such that healthcare professionals (or end-users) can interpret and, if needed, override or mitigate the AI’s decisions. Think of an AI diagnostic aid – the clinician should remain in the loop and able to disagree with or verify the AI’s recommendation, as appropriate.

In summary, EU guidance envisions a harmonized approach: the MDR/IVDR ensures the baseline safety and performance of medical devices (including software), while the AI Act imposes additional guardrails specific to AI’s unique challenges (like algorithmic bias, transparency, and continuous learning). Companies developing AI-driven medical software should closely follow both sets of rules and the related guidance documents (MDCG 2019-11 on software qualification, MDCG 2020-1 on clinical evaluation of software, and the new 2025 joint guidance) to ensure compliance. The end result sought by regulators is safe, effective, and trustworthy AI in healthcare.

Practical Implications for Clinical Evaluation Documentation

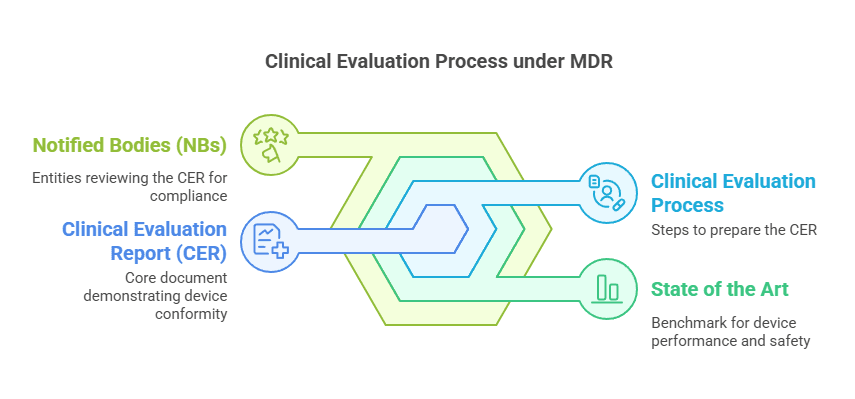

So, what do these definitions and regulatory expectations mean for a manufacturer preparing Clinical Evaluation documents for an AI-based software medical device? In EU medical device regulation, clinical evaluation is documented primarily through the State of the Art (SOTA) analysis, the Clinical Evaluation Plan (CEP), and the Clinical Evaluation Report (CER). Let’s break down the practical implications for each:

State of the Art (SOTA): The SOTA section of your clinical evaluation sets the context for how your device compares to current medical practice and technology. If your software uses AI, the SOTA needs to reflect the latest in both the clinical field and AI technology. Practically, this means documenting current scientific and technical knowledge about AI in your device’s domain. For example, you would include an overview of existing AI solutions or algorithms in that medical field, relevant clinical guidelines or standards for AI (if any), and known limitations or concerns (such as common bias issues or performance benchmarks from literature). Regulators expect that you justify your algorithm’s design and performance against the backdrop of today’s knowledge. Moreover, since the MDR requires software to be developed and manufactured in accordance with the state of the art (see GSPR 17.2 in MDR Annex I), your SOTA should cite relevant standards or best practices for AI (e.g. machine learning development standards, Good Machine Learning Practice principles, data management standards). In short, a thorough SOTA shows that you understand where your AI stands in the evolving landscape and that you’ve considered current benchmarks for safety and performance.

Clinical Evaluation Plan (CEP): When your product involves AI, the clinical evaluation plan must account for the specific ways you’ll assess the AI’s safety and performance. Key considerations to incorporate:

Performance Metrics: Define what clinical performance means for the AI (accuracy, sensitivity, specificity, ROC AUC, etc. for a diagnostic AI; or perhaps treatment outcome measures for a decision-support AI). The plan should outline how you will collect evidence for these metrics.

Representative Data: Ensure the plan requires testing the AI on data representative of the intended patient population and use conditions. Because AI can behave differently on different data, the CEP should include strategies for validating the model on various subgroups (for example, different demographic groups, imaging from different hospitals, etc.) to demonstrate generalizability.

Bias and Robustness Checks: The plan should include methods to check for unwanted bias in the AI’s outputs. For instance, will you analyze performance by age, sex, or ethnicity to ensure no group is underserved? Also, outline how you will test the AI’s robustness to real-world variations (like poorer image quality, noise, edge cases). This ties in with the AI Act’s emphasis on data governance and bias mitigation.

Clinical Validation Strategy: If the AI is novel, you might plan prospective clinical studies or pilot programs. The CEP should mention if a clinical investigation is needed (e.g. to collect clinical data on how the AI performs in practice) or if the evaluation will rely on existing datasets and literature. Keep in mind that if a prospective study is done, under the AI Act it could be considered part of real-world testing of the AI system, which has its own documentation requirements.

Post-Market Follow-up: Plan how you will continue to gather evidence after the device is on the market. Given AI systems can evolve or face drift in performance, your post-market clinical follow-up plan (PMCF, as part of the CEP or a separate plan) should describe how you will monitor the AI’s real-world performance and what metrics will trigger updates or improvements.

Essentially, the CEP for an AI medical device must be comprehensive and proactive, anticipating how to demonstrate the algorithm’s clinical value and manage its risks throughout the device lifecycle.
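The performance, representativeness, and bias points above usually end up as a per-subgroup table of sensitivity and specificity with confidence intervals. The following is a minimal sketch of how such a table could be computed; the function names, the record format, and the synthetic counts are assumptions made for illustration, and the Wilson score interval is just one common choice of interval, not one mandated by the MDR or the AI Act.

```python
import math
from collections import defaultdict


def wilson_interval(successes: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion (z=1.96 gives roughly 95% coverage)."""
    if total == 0:
        return (0.0, 1.0)  # no data: the interval is uninformative
    p = successes / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return (max(0.0, centre - half), min(1.0, centre + half))


def subgroup_metrics(records):
    """records: iterable of (subgroup, truth, prediction), truth/prediction as bool."""
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for subgroup, truth, pred in records:
        key = ("tp" if pred else "fn") if truth else ("fp" if pred else "tn")
        counts[subgroup][key] += 1

    report = {}
    for subgroup, c in counts.items():
        pos, neg = c["tp"] + c["fn"], c["tn"] + c["fp"]
        report[subgroup] = {
            "n": pos + neg,
            "sensitivity": c["tp"] / pos if pos else None,
            "sensitivity_ci": wilson_interval(c["tp"], pos),
            "specificity": c["tn"] / neg if neg else None,
            "specificity_ci": wilson_interval(c["tn"], neg),
        }
    return report


# Synthetic example data (not from any real study).
records = (
    [("adult", True, True)] * 45 + [("adult", True, False)] * 5
    + [("adult", False, False)] * 90 + [("adult", False, True)] * 10
    + [("paediatric", True, True)] * 8 + [("paediatric", True, False)] * 2
    + [("paediatric", False, False)] * 18 + [("paediatric", False, True)] * 2
)
report = subgroup_metrics(records)
for name, row in report.items():
    lo, hi = row["sensitivity_ci"]
    print(f"{name}: n={row['n']}, sensitivity={row['sensitivity']:.2f} ({lo:.2f}-{hi:.2f})")
```

In this synthetic data the paediatric interval is much wider than the adult one, which is the kind of finding a CEP should anticipate: a small subgroup may need additional data before a performance claim can be made for it.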

Clinical Evaluation Report (CER): The CER is where all the evidence comes together to prove your AI-based device is safe and performs as intended, yielding a positive benefit/risk profile. In the CER for an AI device, expect to:

Summarize AI-Specific Evidence: Present the results of validation studies, including both technical validation (algorithm accuracy against reference standards) and clinical validation (impact on patient outcomes or clinical decisions). For instance, if your AI detects a condition from medical images, the CER would include study data comparing the AI’s detection rate and false-alarm rate to clinicians or to an established gold standard.

Discuss Adaptiveness and Training Data: Since the AI Act cares about training data quality, your CER should describe the data used to train and test the model. Include details like the size of datasets, their sources, and why they are representative. If the AI “learns” on the fly (online learning or periodic model updates), explain how you verified that this adaptiveness does not compromise safety over time. Manufacturers often “lock” an AI model before seeking CE marking (meaning the algorithm weights are fixed during evaluation); if that’s the case, document it, and outline the procedure for any future model updates (which might need new validation and maybe even a new conformity assessment if it’s a “substantial modification” under the AI Act).

Benefit-Risk Analysis: Just like any medical device, clearly analyze the clinical benefits versus risks. For AI, benefits could include improved diagnosis speed or accuracy, while risks could include false results or misinterpretation. The CER should honestly appraise any known limitations of the AI (for example, “the algorithm has reduced sensitivity in very low-light images” or “performance drops if the input data deviates from the training set’s characteristics”) and describe risk mitigations in place. Regulators will look for acknowledgment of state-of-the-art performance levels – if your AI is slightly less accurate than an average human professional but still within an acceptable range, say so and justify why it’s beneficial in the workflow (e.g. it may not beat a doctor, but it never gets tired and can be a second reader).

Compliance with GSPRs and AI Requirements: The CER should cross-reference how the evidence shows compliance with relevant general requirements. This includes MDR’s requirements (like clinical performance, safety, acceptability of side-effects) and the AI Act’s requirements (like accuracy, robustness, and cybersecurity for high-risk AI). Expect that notified bodies (under MDR) and possibly AI compliance experts will review the CER to ensure that claims about the algorithm’s performance are backed by data and that all risks (including AI-specific ones) are addressed with evidence.
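When the CER states that the model was “locked” during evaluation, it helps to be able to show that the deployed artefact is the same one that was validated. One simple way to do this, sketched below, is to record a cryptographic checksum of the model file in the documentation and compare it at release time. The file layout and function names here are hypothetical; only Python’s standard `hashlib` and `pathlib` modules are used.

```python
import hashlib
from pathlib import Path


def model_fingerprint(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a model file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_locked_version(path: Path, documented_sha256: str) -> bool:
    """True if the file on disk matches the checksum recorded in the documentation."""
    return model_fingerprint(path) == documented_sha256.strip().lower()
```

A mismatch does not by itself say whether a change is significant; it only signals that the change management procedure described in the technical documentation needs to be followed before release.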

In practice, preparing these documents for an AI-driven device often means interdisciplinary work: you need clinical experts, data scientists, and regulatory specialists collaborating. A few practical tips:

Keep documentation of your model development and validation – this technical evidence often feeds directly into the clinical evaluation.

Use tables and graphs in the CER to make AI performance metrics clear (e.g. a table of sensitivity/specificity in different subgroups, or a calibration curve if relevant). Clarity is part of transparency.

Be prepared to update the clinical evaluation as the AI model or the state of the art evolves. The SOTA, in particular, might need refreshing more frequently in fast-moving AI fields.

Examples: AI in Action for Medical Devices

To illustrate how all of this comes together, let’s look at a few practical examples of AI-based medical device software and consider how the definition and guidance apply:

Example 1: AI for X-ray Diagnostics – Imagine a software that uses a machine learning model to detect signs of pneumonia on chest X-rays. This clearly fits the AI Act’s definition: it’s a machine-based system that infers from image data whether certain features (patterns of lung opacities) are present, then outputs a prediction (e.g. “likely pneumonia” or a probability score). Under the MDR, this software qualifies as a medical device (it provides information for diagnosis) and would likely be classified as at least class IIa or IIb (since a missed pneumonia could significantly influence patient treatment). Because it requires notified body review, it’s a high-risk AI system under the AI Act. In developing this product, the manufacturer’s State of the Art research would cover current methods of pneumonia detection (human radiologist performance, any other AI tools on the market, clinical guidelines for pneumonia diagnosis). The Clinical Evaluation Plan would outline how to validate the AI – for example, using a retrospective study on X-ray images with known outcomes to measure the algorithm’s sensitivity and specificity, and perhaps a reader study where radiologists use the AI’s assistance. They would plan to check the AI on different demographics (adult vs pediatric X-rays) to ensure it works across the intended population. In the CER, the manufacturer would present results showing, say, that the AI can detect pneumonia with 95% sensitivity and 90% specificity, improving upon average radiologist performance in certain settings. They would address potential risks like false negatives (e.g. how often did it miss pneumonia that a doctor caught, and what’s the clinical impact?). They’d also include usage guidance: perhaps the AI highlights areas of interest on the X-ray as an aid, but the final diagnosis is confirmed by a clinician (providing human oversight). 
The transparency requirement means the user manual and even the on-screen interface should make it clear that this is an AI-generated suggestion, not a definitive diagnosis – enabling the radiologist to understand the tool’s role.
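Sensitivity and specificity alone do not tell a reader how often a positive flag is correct; that depends on how common pneumonia is in the population being screened. The short calculation below applies Bayes’ rule to the illustrative 95% / 90% figures from this example. The 10% prevalence is an assumption chosen only to make the arithmetic concrete, and `predictive_values` is a hypothetical helper name.

```python
def predictive_values(sensitivity: float, specificity: float, prevalence: float) -> tuple[float, float]:
    """Return (PPV, NPV) for a test applied to a population with the given prevalence."""
    tp = sensitivity * prevalence              # true positives per person screened
    fn = (1 - sensitivity) * prevalence        # missed cases
    tn = specificity * (1 - prevalence)        # correctly cleared
    fp = (1 - specificity) * (1 - prevalence)  # false alarms
    return tp / (tp + fp), tn / (tn + fn)


# Illustrative figures from the example; prevalence of 10% is an assumption.
ppv, npv = predictive_values(sensitivity=0.95, specificity=0.90, prevalence=0.10)
print(f"PPV={ppv:.3f}, NPV={npv:.3f}")  # PPV=0.514, NPV=0.994
```

Under these assumptions roughly half of the positive flags would be false alarms, while a negative result would be right about 99% of the time. This is one reason the CER should state the intended use population and why human confirmation of positive findings matters.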

Example 2: Personalized Insulin Dosing Advisor – Consider a smartphone app that uses an AI algorithm to recommend insulin dose adjustments for diabetic patients based on their blood glucose readings, diet, and activity data. This software acts autonomously to a degree (analyzing data and giving dose advice) and can adapt (it learns an individual’s insulin response over time). It is thus an AI system under the EU definition. As a medical device, it likely falls under class IIb (advising on dosing of a medicine). With that classification, it’s again a high-risk AI under the AIA. For this product, clinical evaluation must be rigorous: the CEP would include plans for clinical studies where the AI’s dosing recommendations are tested (perhaps in a controlled setting comparing outcomes or glucose control for patients using the AI vs standard care). Special attention must be paid to safety – the app must not recommend dangerous insulin overdoses or under-doses. The CER would compile evidence such as: simulation results showing the AI’s recommendations stay within safe bounds, user studies demonstrating that with AI assistance patients spent more time in the target blood glucose range, etc. The documentation would also need to address AI-specific points: how the training data included a wide range of patient profiles (to avoid bias towards, say, only adults or only a certain diet), and how the algorithm’s adaptiveness is controlled. For instance, the manufacturer might “lock” the model for each patient for a period of time and only update it with new data after verifying the update won’t cause erratic advice – this process would be explained in the technical file and summarized in the clinical evaluation. Transparency and user instruction are vital here: patients (or clinicians) must be informed that an AI is analyzing their data and providing advice, with clear warnings that they should double-check suggestions and what to do if a suggestion seems off. 
The AI Act’s human oversight principle would encourage the design of the app to include, for example, a feature where the patient can indicate if they followed the advice and whether the result was as expected, thus keeping a human in the loop and gathering feedback.
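The idea that the AI’s recommendations “stay within safe bounds” can be made concrete with a guard layer that sits between the model and the user. The sketch below is purely illustrative: the class names, the limit values, and the choice to withhold a suggestion rather than clamp it are all assumptions, and real limits would have to come from the manufacturer’s risk analysis and clinical input, not from this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SafetyLimits:
    """Illustrative limits only; real values come from the risk management file."""
    max_units_per_dose: float
    max_change_fraction: float  # largest allowed relative change vs. the previous dose


@dataclass(frozen=True)
class GuardedAdvice:
    suggested_units: Optional[float]  # None means no suggestion is shown to the user
    needs_human_review: bool
    reason: str


def guard_recommendation(model_units: float, previous_units: float,
                         limits: SafetyLimits) -> GuardedAdvice:
    """Pass the model output through only if it respects the configured limits."""
    if model_units < 0 or model_units > limits.max_units_per_dose:
        return GuardedAdvice(None, True, "outside absolute dose limits")
    if previous_units > 0:
        change = abs(model_units - previous_units) / previous_units
        if change > limits.max_change_fraction:
            return GuardedAdvice(None, True, "change exceeds allowed step")
    return GuardedAdvice(model_units, False, "within limits")


# Hypothetical limit values, chosen only to exercise the three branches.
limits = SafetyLimits(max_units_per_dose=10.0, max_change_fraction=0.2)
print(guard_recommendation(5.5, 5.0, limits))   # passes through
print(guard_recommendation(8.0, 5.0, limits))   # withheld: step too large
print(guard_recommendation(12.0, 5.0, limits))  # withheld: above absolute limit
```

Withholding the suggestion and flagging it for review, instead of silently clamping the value, keeps the human in the loop and leaves a record that can feed post-market monitoring.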

Example 3: Pathology Slide Analysis Tool – Take a software that uses AI to analyze digital pathology slides (images of tissue biopsies) to identify cancerous cells and quantify tumor markers. This AI aids pathologists by marking suspicious areas and even providing automated counts (e.g. “80% of cells in this region are positive for the cancer marker”). Under the AI Act, this image analysis algorithm is an AI system: it infers patterns from pixel data to produce diagnostic-relevant information. As a medical device, it would usually be class IIa or IIb (since it influences diagnosis). The State of the Art section would review current pathology workflows and any existing AI or computer-assisted tools, noting, for example, that pathologists typically review slides manually which can be time-consuming and somewhat subjective, and that AI could improve consistency. It would also summarize any literature on using AI in pathology (a growing field). The Clinical Evaluation Plan might include retrospective validation (comparing the AI’s analysis with expert pathologist readings on a large set of historical slides) and a prospective phase (where pathologists use the tool in practice and outcomes are tracked). If the AI highlights cancerous regions, one measure of safety/performance is that it does not miss clinically significant findings more often than a human would. The plan would detail how to measure that (e.g. have multiple pathologists review slides with and without AI, measuring sensitivity). In the CER, the manufacturer would report, say, that the AI improves efficiency (pathologists complete cases 20% faster with the tool) while maintaining or improving detection rates of small tumor foci. It would discuss any failures of the AI (perhaps it struggles with very rare tumor subtypes due to limited training data – which would be noted as a limitation). 
The CER would also cover compliance with both MDR and AI Act requirements: for instance, documenting that the tool’s outputs are validated and that it includes an on-screen notice like “AI-generated suggestions – for confirmation by a pathologist,” fulfilling the user awareness mandate of the AI Act. Post-market, the maker would plan to collect user feedback and diagnostic concordance data to ensure the AI continues to perform as expected in different labs – aligning with both MDR post-market surveillance and AI Act monitoring obligations.
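Collecting diagnostic concordance data post-market can be as simple as tracking, over a rolling window of recent cases, how often the AI’s finding matched the pathologist’s final call. The following is a minimal sketch under stated assumptions: `ConcordanceMonitor`, the window size, and the alert threshold are hypothetical, and a real PMCF plan would define these values and the follow-up actions in advance.

```python
from collections import deque
from typing import Optional


class ConcordanceMonitor:
    """Rolling agreement rate between AI output and the pathologist's final finding."""

    def __init__(self, window: int, alert_below: float) -> None:
        self._results: deque = deque(maxlen=window)  # oldest cases drop off automatically
        self.alert_below = alert_below

    def record(self, ai_finding: bool, pathologist_finding: bool) -> None:
        self._results.append(ai_finding == pathologist_finding)

    @property
    def concordance(self) -> Optional[float]:
        if not self._results:
            return None
        return sum(self._results) / len(self._results)

    def needs_investigation(self) -> bool:
        """Alert only once the window is full, to avoid noise from the first few cases."""
        full = len(self._results) == self._results.maxlen
        return full and self.concordance < self.alert_below


# Hypothetical settings: last 10 cases, alert if agreement falls below 90%.
monitor = ConcordanceMonitor(window=10, alert_below=0.9)
for _ in range(10):
    monitor.record(ai_finding=True, pathologist_finding=True)
print(monitor.concordance, monitor.needs_investigation())  # 1.0 False

for _ in range(3):
    monitor.record(ai_finding=True, pathologist_finding=False)
print(monitor.concordance, monitor.needs_investigation())  # 0.7 True
```

Running one monitor per site or per scanner type would help show whether a drop in agreement is specific to one lab, which is the “different labs” concern raised above.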

Each of these examples underscores a common theme: using AI in medical devices brings great promise, but also a responsibility to thoroughly assess and document performance in a clinical context. Manufacturers must be diligent in explaining how their AI works, proving it works as intended, and staying vigilant for any issues once it’s deployed in the real world. The EU’s definition and guidance on AI provide a clear framework to do exactly that – ensuring that whether it’s a diagnostic imaging AI, a treatment recommendation app, or any other smart medical software, it earns the trust of both regulators and users through transparency, evidence, and robust design.

FAQ — AI under MDR and the AI Act

Q1 — How can I tell if my software is considered AI under the AI Act?

If your software learns from data or makes predictions, classifications, or decisions from input data without being manually coded for each outcome, it’s probably considered AI.

Simple calculators or fixed rule systems usually don’t count.

Q2 — My AI model is locked and doesn’t keep learning. Does it still count as AI?

Yes. Even if your model doesn’t update itself, it still counts as AI if it uses machine learning or similar inference-based methods. The definition includes systems that can be adaptive, not only those that are.

Q3 — Does the MDR even talk about AI?

No. The MDR never uses the word “AI.”

It only looks at what the software does.

If it provides information used for diagnosis, monitoring, prediction, prognosis, treatment, or alleviation of disease, it is a medical device — and all MDR rules apply.

Q4 — When does my AI get classified as “high-risk” under the AI Act?

Almost always, if it’s a medical device above Class I.

Any AI system that is a medical device and goes through notified body review is considered high-risk under the AI Act.

Q5 — What do I need to add in the SOTA if my software uses AI?

You need to show both:

The clinical state of the art in your target field

The current knowledge about AI in that field (algorithms, benchmarks, limitations, known risks or biases)

Also mention any standards or best practices used to design or validate your AI.

Q6 — How does AI change what I need to write in the CEP?

Your CEP should include:

AI-specific performance metrics and how you’ll measure them

Plans to test your AI on representative populations

Methods to check for bias and performance drift

Human oversight and safety fallback strategies

Post-market follow-up plans for real-world performance

Q7 — What should I add in the CER if my device uses AI?

Make sure your CER:

Describes your training and validation data sources

Shows performance across subgroups

Explains how your AI’s outputs are clinically valid and safe

Discusses risks, limitations, and your benefit-risk balance

References both MDR GSPRs and AI Act requirements

Q8 — What if my AI updates itself after it’s on the market?

This counts as adaptive AI.

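A model update may be treated as a substantial modification under the AI Act and can require a new conformity assessment, unless the change was pre-determined by the provider and documented at the time of the initial conformity assessment.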

You need a clear change management plan and continuous performance monitoring.

Q9 — What happens if I don’t follow the AI Act rules?

Your device might be blocked from the EU market or removed later, even if it passed MDR review.

Reviewers now check for both MDR and AI Act compliance.

Q10 — Do I need to create a second technical file just for the AI Act?

No. You can use one technical file, but it must cover both MDR and AI Act requirements.

Most companies add AI Act sections (data governance, bias control, transparency, human oversight) inside their MDR documentation.

Sources

Regulation (EU) 2024/1689 (EU AI Act) – Article 3 Definition of an AI System.

General Safety and Performance Requirements, MDR Annex I – e.g. GSPR 17.2 on state of the art in software development and GSPR 23 on information for users, which align with AI Act transparency obligations.

Final Thoughts

The integration of artificial intelligence into medical device software introduces additional regulatory obligations that extend beyond those required under the MDR. While the MDR establishes the general framework for demonstrating safety, performance, and clinical benefit, the AI Act imposes complementary requirements specifically addressing risks related to data governance, algorithmic performance, transparency, and human oversight.

Manufacturers are expected to incorporate these AI-specific considerations into their technical documentation and clinical evaluation. Early identification of the system’s AI nature and its classification as a high-risk AI system is essential to ensure that the State of the Art analysis, Clinical Evaluation Plan, and Clinical Evaluation Report are developed in a manner that fully reflects both sets of regulatory requirements. This integrated approach supports the generation of robust evidence, facilitates conformity assessment, and strengthens the overall regulatory acceptability of the device.

✌️ Peace,

Hatem Rabeh, MD, MSc Ing

Your Clinical Evaluation Expert & Partner
